Bad Google Indexing "Secure" Pages...

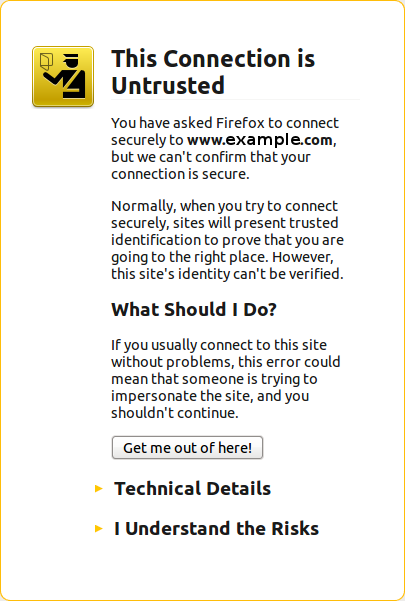

About a week ago, a customer's website started appearing in Google with HTTPS (the secure version of the site). The pages are served securely, but with our own website's certificate, so the browser shows a big bad error saying that everything is broken and that if you proceed you'll know what hell is like.

The fact is that this customer never had a secure certificate. In other words, there is no reason for the site to have been referenced with HTTPS unless someone typed a link to their site and inadvertently entered https://... instead of http://...

I checked a few of the pages where there is a reference to the customer website and all of them had the correct protocol. Note that you can find such links using Google and other search engines. In Google, enter "link:" followed by the domain name of the website.

To resolve the issue, I went to Google Webmaster Tools and added both websites: the HTTP version as well as the HTTPS version. Both appeared, and Webmaster Tools gave me some information about each site. I could see that the HTTPS version was all there, which in a way was annoying.

To prevent any further secure protocol pages from appearing in the index, I changed the Apache settings to include a rewrite rule. If you request the robots.txt file over the secure version of that domain, I return a different robots.txt that forbids you from indexing anything on that website.

The robots.txt looks like this (very simple!):

    # robots.txt for websites that are to not be indexed.
    User-agent: *
    Disallow: /

Google does respect the robots.txt file so it will ignore all the pages in the secure version of the site that we do not want it to index.
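As a quick sanity check of what that file means to a well-behaved crawler, here is a minimal Python sketch using the standard library's `urllib.robotparser`. The helper name `is_allowed` and the sample paths are my own, made up for illustration, and Python's parser is only an approximation of how Google interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Same rules as the "do not index" robots.txt shown above.
NOINDEX_ROBOTS = """\
# robots.txt for websites that are to not be indexed.
User-agent: *
Disallow: /
"""


def is_allowed(robots_text, user_agent, url):
    """Return True if user_agent may fetch url under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Hypothetical paths; every one of them should be refused.
    for path in ("/", "/about", "/images/logo.png"):
        url = "https://www.example.com" + path
        print(path, is_allowed(NOINDEX_ROBOTS, "Googlebot", url))
```

Because `Disallow: /` is a prefix match on every path, the loop should report that nothing on the secure site may be fetched.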

Here is the code I used in Apache to serve the "please do not index" robots.txt file:

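    # Only for this customer's domain, and only over HTTPS...
    RewriteCond %{HTTP_HOST} =www.example.com
    RewriteCond %{HTTPS} on
    # ...when the request is exactly /robots.txt (dot escaped to match literally)...
    RewriteCond %{REQUEST_URI} ^/robots\.txt$
    # ...serve the "do not index" file as plain text instead.
    RewriteRule .* %{DOCUMENT_ROOT}/noindex-robots.txt [L,T=text/plain]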
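The three conditions are combined with a logical AND, so the substitute file is only served when all of them hold. The Python sketch below mirrors that decision outside of Apache; the function name `serves_noindex_robots` is hypothetical, and this is only a model of the rule's logic, not of how mod_rewrite is implemented.

```python
import re


def serves_noindex_robots(host, https, request_uri):
    """Mirror the three RewriteCond lines: host, HTTPS flag, and exact URI."""
    return (
        host == "www.example.com"                      # RewriteCond %{HTTP_HOST}
        and https                                      # RewriteCond %{HTTPS} on
        and re.fullmatch(r"/robots\.txt", request_uri) is not None  # %{REQUEST_URI}
    )


if __name__ == "__main__":
    print(serves_noindex_robots("www.example.com", True, "/robots.txt"))
    print(serves_noindex_robots("www.example.com", False, "/robots.txt"))
```

The plain HTTP request falls through to the site's regular robots.txt, which is the point: only the secure version gets the blanket Disallow.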

Next, I waited a few days. I can now see that Google read the new robots.txt file for the HTTPS version but not for the HTTP version, so I think this will work. I'm not 100% sure that they will really be capable of distinguishing both cases properly, though. We'll have to wait a little longer to see what happens.
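Rather than waiting on Google, the two versions can also be compared directly. The following is a rough Python sketch under a few assumptions: `www.example.com` stands in for the customer's domain, the helper names are invented for this example, and certificate verification is deliberately switched off because the HTTPS side presents another site's certificate. That last part is acceptable for a one-off check like this, not for anything else.

```python
import ssl
import urllib.request
from urllib.robotparser import RobotFileParser


def blocks_everything(robots_text):
    """True if a crawler obeying robots_text may not even fetch '/'."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return not parser.can_fetch("Googlebot", "/")


def fetch_robots(scheme, host):
    """Download robots.txt over the given scheme, ignoring certificate errors."""
    context = ssl.create_default_context()
    context.check_hostname = False       # the certificate belongs to another site
    context.verify_mode = ssl.CERT_NONE  # so skip verification for this check only
    url = f"{scheme}://{host}/robots.txt"
    with urllib.request.urlopen(url, context=context, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    host = "www.example.com"  # placeholder domain
    for scheme in ("http", "https"):
        text = fetch_robots(scheme, host)
        print(scheme, "blocks everything:", blocks_everything(text))
```

If the rewrite rule is doing its job, the expectation is that only the `https` line reports that everything is blocked.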